The rise of generative AI (GenAI) over the past two years has driven a whirlwind of innovation and a massive surge in demand from enterprises worldwide to use this transformative technology. However, with this drive for rapid innovation come increased risks, as the pressure to build quickly often leads to cutting corners around security. Additionally, adversaries are now using GenAI to scale their malicious activities, making attacks more prevalent and potentially more damaging than ever before.

To address these challenges, securing enterprise applications that utilize GenAI requires implementing fundamental security controls to protect the backbone infrastructure. This infrastructure powers the applications and has access to vast amounts of enterprise data the applications rely on. By ensuring these security fundamentals are in place, enterprises can trust these applications as they are deployed across the organization.

The Next GenAI Evolution: The Emergence of AI Agents

GenAI is rapidly evolving from a content creation engine and co-pilot for humans into autonomous agents capable of making decisions and performing actions on our behalf. Although AI agents are not widely used in major production environments today, analysts predict their rapid adoption in the near future due to the enormous benefits they bring to organizations. This shift introduces significant security challenges, particularly in managing machine identities (AI agents) that may not always perform predictably.

As AI agents become more prevalent, enterprises will face the complexity of securing these identities at scale, potentially involving thousands or even millions of agents running concurrently. Key security considerations include authenticating AI agents to various systems (and to other AI agents), managing and limiting their access and ensuring their lifecycle is controlled to prevent rogue agents from retaining unnecessary access. Additionally, it’s crucial to verify that AI agents are performing their intended functions within the services they are designed to support.
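To make the lifecycle point concrete, here is a minimal sketch of an inventory that gives each agent a narrow set of scopes and an expiry, then retires agents that outlive it. Everything in it (`AgentRegistry`, `AgentIdentity`, the scope strings, the one-hour lifetime) is a hypothetical illustration rather than any product's API; a real deployment would sit on top of your identity provider or secrets manager.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class AgentIdentity:
    """Hypothetical record for one AI agent's machine identity."""
    agent_id: str
    owner: str            # team accountable for the agent
    scopes: frozenset     # the only actions the agent may perform
    expires_at: datetime  # hard end of life unless re-registered
    active: bool = True


class AgentRegistry:
    """Illustrative in-memory inventory of agents (an assumption, not a real API)."""

    def __init__(self):
        self._agents = {}

    def register(self, agent_id, owner, scopes, ttl, now):
        """Record an agent with a limited scope set and a finite lifetime."""
        agent = AgentIdentity(agent_id, owner, frozenset(scopes), now + ttl)
        self._agents[agent_id] = agent
        return agent

    def is_allowed(self, agent_id, scope, now):
        """Default deny: unknown, retired, expired or out-of-scope requests fail."""
        agent = self._agents.get(agent_id)
        return (
            agent is not None
            and agent.active
            and now < agent.expires_at
            and scope in agent.scopes
        )

    def retire_expired(self, now):
        """Deactivate agents past their expiry so none retains access."""
        retired = []
        for agent in self._agents.values():
            if agent.active and now >= agent.expires_at:
                agent.active = False
                retired.append(agent.agent_id)
        return retired


if __name__ == "__main__":
    start = datetime.now(timezone.utc)
    registry = AgentRegistry()
    registry.register("invoice-bot", "finance", {"invoices:read"},
                      timedelta(hours=1), start)
    print(registry.is_allowed("invoice-bot", "invoices:read", start))
    print(registry.retire_expired(start + timedelta(hours=2)))
```

Passing `now` explicitly keeps the checks deterministic and easy to test; running `retire_expired` on a schedule is one simple way to keep forgotten agents from holding on to access.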

As this technology continues to evolve, more insights will emerge on best practices for integrating AI agents into enterprise systems securely. However, what is clear is that securing the backend infrastructure powering your GenAI implementations will be a prerequisite for running AI agents on a platform that is inherently secure.

Addressing Emerging Security Challenges

The first thing that may come to mind when considering the security of GenAI is the need to secure these new and exciting technologies as they emerge in the real world. This is a significant challenge due to the rapid pace of change, the introduction of new types of services and capabilities and ongoing innovation.

As with any period of significant innovation, security controls and practices must be adapted. In some cases, innovation may be required to address challenges that didn’t exist before. The rise of GenAI is no exception, bringing unique security concerns that demand continuous innovation. One example is protecting GenAI-powered applications from attacks such as prompt injection. These attacks can cause the application to expose sensitive data or perform unintended actions.

However, it’s important to remember that, like any application, GenAI-powered apps are built upon underlying systems and databases. Enterprise applications using GenAI will be vulnerable to potentially devastating attacks if this backbone infrastructure is not secured appropriately. Attackers can leak large swaths of sensitive data, poison data, manipulate the AI model or disrupt the system’s availability and customer experience.

Many identities require access to the backend infrastructure, and each one represents a high level of risk and a likely target for attackers. Compromised identities remain a leading cause of breaches, giving attackers unauthorized access to sensitive systems and data. Identifying these identities, understanding their roles and access requirements and securing them are critical priorities. Fortunately, the measures to secure identities within this backbone infrastructure are the same identity security best practices you likely use to protect other environments, particularly your cloud infrastructure, where most GenAI components will be deployed.

Inside Enterprise GenAI-powered Applications

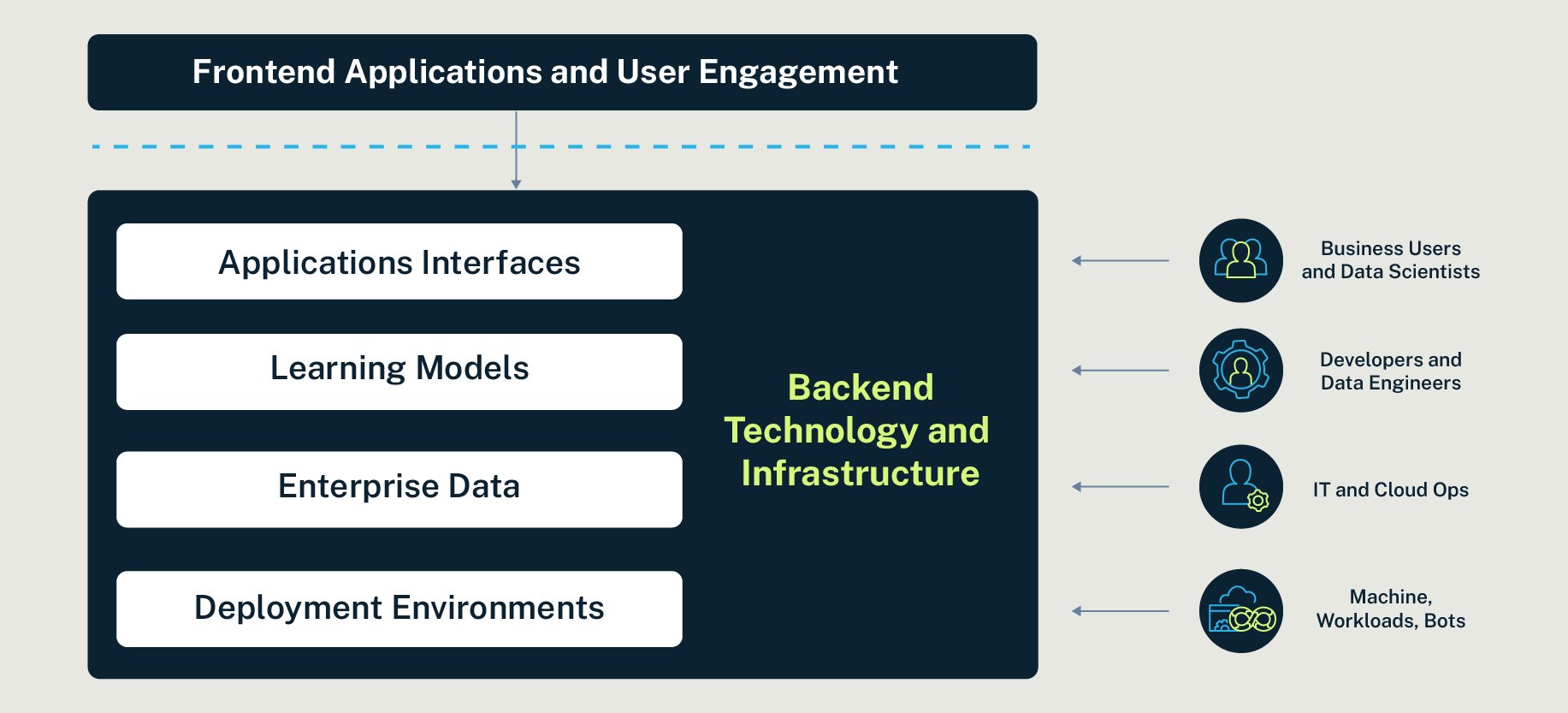

Before diving into the recommended security controls and approach, let’s briefly review some of the key components and building blocks of GenAI-powered applications, along with the identities that interact with these components. This overview isn’t intended to be comprehensive but includes some essential approaches and areas to consider, especially for components and services typically hosted or managed by your organization.

Here are some critical components to consider:

- Application interfaces: APIs act as gateways for applications and users to interact with GenAI systems. Securing these interfaces is critical to prevent unauthorized access and ensure only legitimate requests are processed.

- Learning models and LLMs: These algorithms analyze data to identify patterns and make predictions or decisions based on that data. They are trained on vast amounts of data to develop powerful applications. Most enterprises will use one or more LLMs from leading global providers such as OpenAI, Google and Meta. Public data trains the LLMs, but to develop high-performing, cutting-edge applications, you must also train them on the unique data that gives your enterprise a competitive edge.

- Enterprise data: Data fuels AI, driving machine learning algorithms and insights. Leveraging internal enterprise data is key to developing unique and impactful enterprise GenAI-powered applications. Protecting sensitive and confidential information from leaks or loss is a top concern and a prerequisite for rolling out these applications.

- Deployment environments: Whether on-premises or in the cloud, secure the environments where you deploy AI applications with stringent identity security measures.

Ultimately, the technologies, services and databases in your cloud environment or data center form the backend infrastructure behind GenAI-powered applications, and you must protect them like all other systems.

Implementing robust identity security measures for each of these elements is essential to mitigate risks and ensure the integrity of GenAI applications.

Implementing Robust Identity Security Controls

Various identities have highly privileged access to the critical infrastructure powering enterprise GenAI applications. If one of these identities is compromised and authentication is bypassed, the attacker gains a huge attack surface and multiple avenues of entry. These privileged users go beyond the IT and cloud operations teams that build and manage the infrastructure and access. They include (but are not limited to):

- Business users assigned to analyze trends in the data, input their expertise and provide validation.

- Data scientists who develop models, prepare datasets and analyze the data.

- Developers and DevOps engineers who manage the databases and, together with IT teams, are responsible for building and scaling the backend infrastructure, either directly or through automated scripts.

A hijacked developer identity could grant an attacker privileged read and write access to sensitive code repositories, core cloud infrastructure administration functions and confidential enterprise data.

We must also remember that this GenAI backbone is sprawling with machine identities that allow systems, applications and scripts to access resources, access and process data, build, manage and scale infrastructure, implement access and security controls and more. As with most modern IT and cloud environments, you can assume there will be more machine than human identities.

With such high stakes, following a Zero Trust, assume-breach approach is key. Security controls must go beyond authentication and basic role-based access control (RBAC), ensuring a compromised account doesn’t leave a large and vulnerable attack surface.

Consider the following identity security controls for all the types of identities outlined above:

- Enforcing strong adaptive MFA for all user access.

- Securing access to credentials, keys, certificates and secrets used by humans and backend applications or scripts, auditing their use and rotating them regularly. Ensure that API keys or tokens that cannot be automatically rotated are not permanently assigned and that only the minimum necessary systems and services are exposed.

- Implementing zero standing privileges (ZSP) so that users have no permanent access rights and can only access data and assume specific roles when necessary. In areas where ZSP is not an option, aim to implement least privilege access to minimize the attack surface in case of user compromise.

- Isolating and auditing sessions for all users who access the GenAI backbone components.

- Centrally monitoring all user behavior for forensics, audit and compliance. Log and monitor any changes.
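As an illustration of the zero standing privileges idea in the list above, the sketch below models a broker in which no user holds a role by default; a role is granted only on request, with a justification, for a capped time window, and every grant is logged. The class and role names and the four-hour ceiling are assumptions made for this example and do not describe any specific product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    """One temporary role assignment (hypothetical structure)."""
    user: str
    role: str
    reason: str
    expires_at: datetime


class JitAccessBroker:
    """Hypothetical just-in-time broker: nobody holds a role by default."""

    MAX_TTL = timedelta(hours=4)  # assumed policy ceiling for any grant

    def __init__(self):
        self._grants = []
        self.audit_log = []  # every grant is recorded for later review

    def request(self, user, role, reason, ttl, now):
        """Grant a role for a limited time; a justification is mandatory."""
        if not reason.strip():
            raise ValueError("a justification is required")
        ttl = min(ttl, self.MAX_TTL)  # never exceed the policy ceiling
        grant = Grant(user, role, reason, now + ttl)
        self._grants.append(grant)
        self.audit_log.append(("granted", user, role, reason, grant.expires_at))
        return grant

    def has_role(self, user, role, now):
        """True only while an unexpired grant exists for this user and role."""
        return any(
            g.user == user and g.role == role and now < g.expires_at
            for g in self._grants
        )


if __name__ == "__main__":
    start = datetime.now(timezone.utc)
    broker = JitAccessBroker()
    print(broker.has_role("dana", "vector-db-admin", start))
    broker.request("dana", "vector-db-admin", "reindex embeddings",
                   timedelta(hours=1), start)
    print(broker.has_role("dana", "vector-db-admin", start))
    print(broker.has_role("dana", "vector-db-admin", start + timedelta(hours=2)))
```

Because access simply lapses when the grant expires, a stolen account that is not inside an active window carries no privileges, which is the attack-surface reduction ZSP aims for.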

Balancing Security and Usability in GenAI Projects

When planning your approach to implementing security and privilege controls, it’s crucial to recognize that GenAI-related projects will likely be highly visible within the organization. Development teams and corporate initiatives may view security controls as inhibitors in these scenarios. The complexity increases as you need to secure a diversified group of identities, each requiring different levels of access and using various tools and interfaces. That’s why the controls applied must be scalable and sympathetic to users’ experience and expectations while ensuring they don’t negatively impact productivity and performance.

Yuval Moss, CyberArk’s vice president of solutions for Global Strategic Partners, joined the company at its founding.